bat file that will run once a day: start chrome "" To fully automate this process, I set up a.

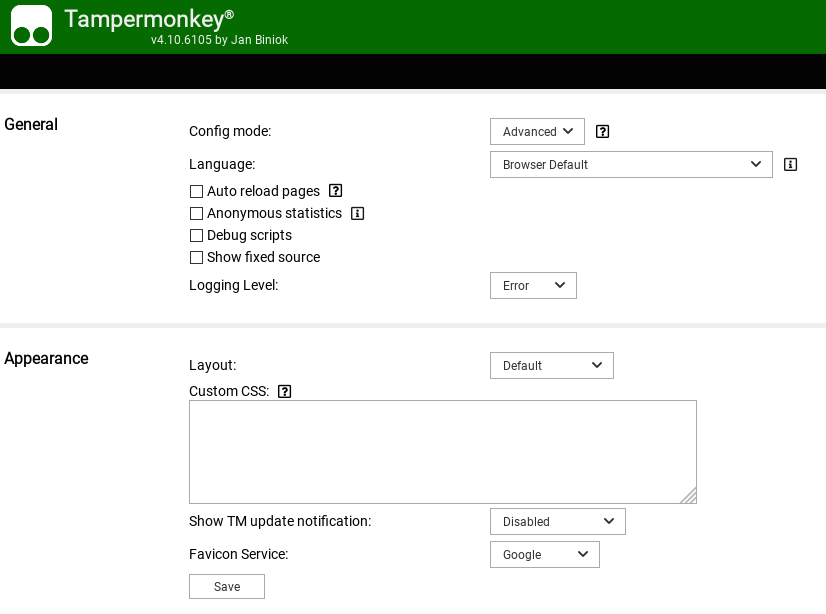

#Tampermonkey run at code

Seven lines of code and I’m scraping the report every time my browser opens the URL. document.getElementById(), document.querySelector(), etc.) to manipulate input fields. I also needed to set some start and end dates before clicking a button, and of course Javascript provides some simple DOM methods (e.g. This works great for me – the report I want to scrape is always at a particular URL. In a nutshell, when you set a URL value in the field, Tampermonkey will execute your script every time Chrome opens that address. My one big complaint about Tampermonkey is that there isn’t an error log built into it, but it’s not a big deal to just run Chrome’s developer tools while you’re building and testing to track any potential bugs. You can even run their built-in syntax checker to ensure that you don’t have any particularly stupid errors. The interface is simple – they provide a template containing some comments/options you can configure and then a Javascript editor where you can drop in your code. Once the extension has been added, you can hover over it and click on the “Create a new script…” option to get started. You visit their extension page in the Chrome Web Store, click the Add To Chrome button, and give the extension some permissions – poof, you’re in business.

The author pivoted from their initial attempts to what they considered to be a more elegant solution – using a Chrome extension called Tampermonkey to run a script on a particular web page, and automating this process with a. But in this blog post I’ll cover the scraping portion, and perhaps I’ll write again when I figure out the rest.Īs I was examining a variety of scraping options I ran into this post over at Stack Overflow:

#Tampermonkey run at how to

I like the idea of having pre-formatted, clean data exported from the site on a schedule – the other half of the battle is figuring out how to move that data into a warehouse. Alternatively, the website offers a CSV export button. The data can be presented in a datatable, but this would require some scripting to run through multiple pages of results, each row being dumped into an output file. I ran into a scenario today where a client needed data scraped from a website, but the website offers no API, cURL, or fetch capabilities as far as I can tell.
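For anyone who wants a starting point, a userscript along these lines might look like the sketch below. It is not the exact script from this post: the `@match` URL, the element IDs (`start-date`, `end-date`) and the `#export-csv` selector are made-up placeholders, and it assumes the report page uses plain date inputs and a button that triggers the CSV export.

```javascript
// ==UserScript==
// @name         Daily report export (sketch)
// @match        https://example.com/reports/daily*
// @grant        none
// ==/UserScript==

// NOTE: the URL above and every selector below are hypothetical placeholders.
// Replace them with the values from the page you are actually scraping.

// Format a Date as YYYY-MM-DD in local time, the value format of <input type="date">.
function toDateInputValue(date) {
    const pad = (n) => String(n).padStart(2, '0');
    return `${date.getFullYear()}-${pad(date.getMonth() + 1)}-${pad(date.getDate())}`;
}

// Fill in the date range, then click the export button.
// Returns false (and does nothing) if any of the controls is missing.
function exportReport(doc, start, end) {
    const startInput = doc.getElementById('start-date');
    const endInput = doc.getElementById('end-date');
    const exportButton = doc.querySelector('#export-csv');
    if (!startInput || !endInput || !exportButton) {
        console.warn('Report controls not found; check the selectors.');
        return false;
    }
    startInput.value = toDateInputValue(start);
    endInput.value = toDateInputValue(end);
    exportButton.click();
    return true;
}

// Only run automatically when a real page is available (i.e. in the browser).
if (typeof document !== 'undefined') {
    const end = new Date();
    const start = new Date(end);
    start.setDate(end.getDate() - 1); // assumed range: yesterday through today
    exportReport(document, start, end);
}
```

Keeping the DOM work in a function that takes the document as a parameter makes it easy to try out in the developer tools console before pasting it into Tampermonkey. If the page builds its form with a framework, setting `value` alone may not be enough and the inputs may also need a `change` event dispatched; that depends on the site.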
